A Head of Digital Innovation at a major luxury brand said it bluntly:

“Our IT operations now feel like they’ve become creative operations - sitting around crafting prompts just to get the images and videos marketing needs. It’s a massive time sink. People are sick of prompting.”

This isn’t a “bad model” story. It’s the gap between sky-high expectations and the maturity of the enterprise deployment required to make generative AI (Gen AI) repeatable, auditable, and on-brand for marketing visuals. The first demo looks like magic; the tenth asset of the day - produced at scale, with consistent quality, brand compliance, and clean handoffs - reveals the truth: today’s Gen AI stacks aren’t ready.

Adoption Is High. Transformation Is Hard.

The numbers show real momentum. In McKinsey’s 2024 survey, 65% of respondents said their organizations were regularly using generative AI, and overall AI adoption jumped to 72%. Many reported 1–4 months from start to production for gen-AI capabilities - fast by enterprise standards. Yet only a small subset can attribute a meaningful share of EBIT to gen AI today.

A year later, McKinsey’s 2025 update is even more direct: more than 80% of organizations say they haven’t seen a tangible enterprise-level EBIT impact from gen AI yet, and fewer than 1 in 5 are even tracking well-defined KPIs for gen-AI solutions. Translation: adoption ≠ value; value requires rewiring processes, not just dropping an AI model into an old workflow.

BCG’s global research echoes this: 74% of companies struggle to achieve and scale value, while only 26% possess the capabilities to move beyond PoCs. Critically, 62% of AI value realized so far sits in core functions, including operations (23%), sales & marketing (20%), and R&D (13%), where compliance, process control, and repeatability matter most.

The Repeatability Gap: One Demo ≠ Ten Per Day

Producing one great, on-brand product video or a lifestyle image is a pilot. Producing 10 per day, 4 days a week, with predictable latency and consistent brand guidelines - that’s production. At that scale, small cracks become outages: version drift between generated assets and metadata, inconsistent color/crop rules, missing legal copy, broken visuals, and approvals that leak into Slack DMs.

Deloitte’s year-end view of 2024 captures the slowdown: over 2/3 of CXOs/business leaders expect 30% or fewer POCs to be fully scaled within 3–6 months, and 55–70% say it will take 12+ months to resolve ROI/adoption challenges (governance, data, training, trust). The tech can sprint; organizations move at the speed of compliance.

Output Formats: Don’t Break the Chain

Enterprises already run on a visual format “contract” - an unwritten rule on how teams exchange design files. For example, a global creative team may output layered banner design files, such as a TIFF/PSD that regional teams must localize. If a GenAI pilot starts producing only flattened MP4s or non-layered images (JPG/PNG), downstream localization and QA grind to a halt. You’ve created a chicken-and-egg adoption trap: either every region’s team switches tools (slow, risky), or the pilot quietly dies.

The lesson: during transition, respect existing format contracts. Emit interoperable, editable outputs (e.g., layered files where needed) so teams can keep moving while you modernize the rails underfoot.
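One way to make a format contract explicit is a small check that runs before handoff. The sketch below is illustrative only: the `FORMAT_CONTRACTS` table, the workflow names, and the `honors_contract` helper are hypothetical, and the set of extensions a real team accepts would come from that team, not from this list.

```python
from pathlib import PurePath

# Hypothetical contracts: the file extensions each downstream workflow can accept.
FORMAT_CONTRACTS = {
    "banner_localization": {".psd", ".tif", ".tiff"},  # editable files for regional teams
    "social_video": {".mp4"},
}


def honors_contract(workflow: str, output_files: list[str]) -> bool:
    """Return True if at least one emitted file is in a format the workflow accepts."""
    emitted = {PurePath(name).suffix.lower() for name in output_files}
    return bool(emitted & FORMAT_CONTRACTS[workflow])


# A pilot that emits only flattened images breaks the localization contract.
print(honors_contract("banner_localization", ["hero.png", "hero.jpg"]))  # False
# Emitting the layered file alongside the new output keeps the chain intact.
print(honors_contract("banner_localization", ["hero.png", "hero.PSD"]))  # True
```

Running a check like this in the pilot itself surfaces a broken handoff on day one rather than when a regional team opens the file.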

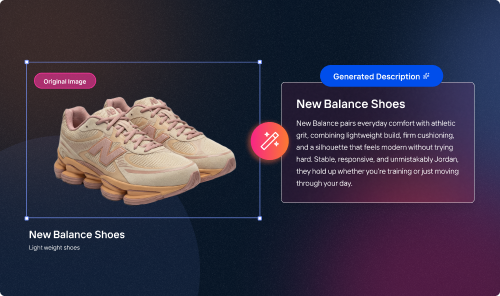

The Hidden Plumbing: Product Truth, Automatic Prompts, Brand Rules

Repeatable, compliant outcomes need three inputs wired into the AI/MLOps flow:

- Product truth - product detail page (PDP) details, taxonomies & product attributes.

- Automatic prompts tied to that data - so you’re not relying on humans to remember every rule and detail, every single run.

- Encoded brand compliance rules - typography, color, crop logic, brand voice, disclaimers, and rights windows as policy, not tribal knowledge.
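As a rough illustration of how the first two inputs connect, the sketch below assembles a prompt from a product record and a rule set instead of free-typed text. Everything here is an assumption for illustration: the `Product` and `BrandRules` fields, the prompt wording, and the forbidden-term guard are hypothetical and would be replaced by whatever your PIM and brand guidelines actually define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    # Hypothetical subset of PDP attributes.
    sku: str
    name: str
    category: str
    color: str


@dataclass(frozen=True)
class BrandRules:
    # Hypothetical subset of encoded brand rules.
    background: str
    aspect_ratio: str
    forbidden_terms: tuple[str, ...]


def build_prompt(product: Product, rules: BrandRules) -> str:
    """Assemble an image prompt from product data and brand rules only."""
    prompt = (
        f"Studio photo of {product.name} ({product.category}) in {product.color}, "
        f"{rules.background} background, aspect ratio {rules.aspect_ratio}."
    )
    # Guard: refuse to emit a prompt that contains a term the brand has banned.
    lowered = prompt.lower()
    for term in rules.forbidden_terms:
        if term.lower() in lowered:
            raise ValueError(f"forbidden term in prompt for {product.sku}: {term}")
    return prompt


bag = Product(sku="SKU-001", name="Leather Tote", category="handbags", color="cognac")
rules = BrandRules(background="warm grey", aspect_ratio="4:5",
                   forbidden_terms=("cheap", "discount"))
print(build_prompt(bag, rules))
```

The point is not the template itself but that nobody has to remember the background color or aspect ratio on the tenth run of the day.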

McKinsey notes that even among high performers, data governance and integration top the list of challenges in capturing value. IDC’s predictions likewise emphasize governance and outcomes: by 2026, 70% of platform providers will bundle gen-AI safety and governance; and in their 2023 survey, 19% already saw gen-AI disrupt operating models, with 18% expecting significant impact within 18 months.

Why Point Tools Stall

Single-point GenAI tools look great in isolation, but they multiply silos: editing in one AI tool, upscaling in another, banners/videos elsewhere. Humans become the glue that pastes outputs together, and that glue doesn’t scale. Audit trails fragment; SLAs slip; costs creep. Unsurprisingly, BCG finds most companies stuck before repeatable value.

Additionally, the lack of central orchestration means every handoff increases opportunities for miscommunication and errors, making it harder to enforce consistent governance and quality control. Fragmented AI workflows often require manual reconciliation between systems, eating up time and introducing data version issues. These silos restrict holistic visibility across asset pipelines, causing compliance gaps to go unnoticed until late in the process. As teams juggle multiple specialized tools, training and onboarding become more complicated, slowing down adoption and reducing overall productivity.

What “Good” Looks Like: A Brand-Compliance Platform

The goal isn’t shiny new marketing assets - it’s repeatable, compliant outcomes that flow through the enterprise without breaking format contracts or burning out teams.

- Asset Identity

- Every creative output - image, video, or variant - gets a stable asset ID that lives in (and is resolvable through) your DAM/PIM, not just a folder path. That ID is the anchor for everything that follows.

- Product Truth at the Edge

- On-demand PDP product taxonomy/metadata lookup with validation.

- Prevents mismatches (e.g., wrong image, out-of-sync product description) before a listing publishes.

- Policy-as-Code for Brand Compliance

- Brand rules (colors, font stacks, crop logic, safety/legal lines), brand voice, and geographic compliance are codified as runtime checks.

- Outputs that violate brand policy fail fast and route to human review.

- Event-Driven Orchestration

- An event bus with idempotent handlers and dead-letter queues; AI agents act on events (new PDP listing or SKU, campaign brief, price change) versus manual prompts.

- Enables agentic AI to operate within guardrails (scoped authority, rollback).

- In-Tool UX (Keep People in the Flow)

- Marketers stay in Shopify, Salesforce Commerce, etc.; designers stay in Photoshop/Figma/AE; AI runs in the background.

- No forced context switching; approvals and QA status appear where people work.

- Output-Format Continuity

- Honor format contracts (e.g., layered files for localization) during rollout.

- Add new outputs without removing the ones downstream teams depend on.

- Observability & SLA Management

- Capture end-to-end lineage per asset: inputs (prompt/data) → generated version → approvals → publish.

- Dashboards for P50/P95 latency, exception rates, rework %, and policy violations.

These principles stitch together the brand-compliance surface, the rails that let GenAI go fast and stay on brand.
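To make "policy-as-code" concrete, here is a minimal sketch of brand rules expressed as runtime checks against an asset keyed by a stable ID. The `Asset` fields, the `POLICY` values, and the routing labels are all hypothetical; a real policy would cover far more (typography, brand voice, rights windows, geography) and would live in version control.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    asset_id: str                # stable ID, assumed resolvable in the DAM/PIM
    width: int
    height: int
    dominant_colors: list[str]   # hex strings extracted from the image
    legal_line: str = ""


# Hypothetical brand policy, versioned like any other code.
POLICY = {
    "version": "example-1",
    "allowed_colors": {"#1A1A1A", "#F5F0E8", "#8B5E3C"},
    "aspect_ratio": (4, 5),      # width : height
    "require_legal_line": True,
}


def check_asset(asset: Asset, policy: dict = POLICY) -> list[str]:
    """Return a list of human-readable policy violations (empty means compliant)."""
    violations = []
    off_palette = [c for c in asset.dominant_colors
                   if c.upper() not in policy["allowed_colors"]]
    if off_palette:
        violations.append(f"off-palette colors: {off_palette}")
    ratio_w, ratio_h = policy["aspect_ratio"]
    if asset.width * ratio_h != asset.height * ratio_w:
        violations.append(f"aspect ratio is not {ratio_w}:{ratio_h}")
    if policy["require_legal_line"] and not asset.legal_line.strip():
        violations.append("missing legal line")
    return violations


def route(asset: Asset) -> str:
    """Fail fast: any violation sends the asset to human review."""
    return "human_review" if check_asset(asset) else "auto_approve"


good = Asset("AST-0001", 800, 1000, ["#1a1a1a"], "All rights reserved.")
bad = Asset("AST-0002", 1000, 1000, ["#FF0000"])
print(route(good), route(bad))   # auto_approve human_review
print(check_asset(bad))
```

Because the violations are returned as data, the same list can feed the review queue, the lineage record, and the policy-violation dashboard.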

Engineer for Reality: Budgets, SLAs, Failure Modes

Treat the Gen AI workflow like a distributed system:

- Latency budgets per step (generate → QA → enhance → publish). Track P95, not just averages. If P95 blows past the SLA, precompute common variants or sizes, or run batch automation asynchronously.

- Degraded modes: retries, fallbacks to smaller/cheaper AI models, and human-in-the-loop checkpoints on policy violations.

- Rollback: if a policy check or rights window fails post-publish, yank the asset via ID from wherever it's published - don’t hunt files by name.
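The "P95, not just averages" point is easy to see with numbers. The sketch below uses the nearest-rank percentile method and hypothetical per-step budgets in seconds; the budget values and sample latencies are invented for illustration.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical P95 budgets per pipeline step, in seconds.
BUDGET_P95_S = {"generate": 30.0, "qa": 5.0, "enhance": 15.0, "publish": 2.0}


def steps_over_budget(latencies: dict[str, list[float]]) -> dict[str, float]:
    """Return each step whose observed P95 exceeds its budget, with that P95."""
    over = {}
    for step, samples in latencies.items():
        p95 = percentile(samples, 95)
        if p95 > BUDGET_P95_S[step]:
            over[step] = p95
    return over


# 18 fast runs and 2 slow ones: the mean looks healthy, the tail does not.
generate_s = [10.0] * 18 + [90.0] * 2
print(sum(generate_s) / len(generate_s))     # 18.0
print(percentile(generate_s, 95))            # 90.0
print(steps_over_budget({"generate": generate_s, "qa": [1.0, 2.0]}))
```

An 18-second average sits comfortably inside a 30-second budget, while one run in ten takes 90 seconds; only the P95 shows that the SLA is being missed.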

McKinsey’s 2025 guidance is clear: organizations that are capturing value have rewired processes and instituted measurement and governance (KPIs, change roadmaps, leadership engagement). Do the same for creative ops.

Agentic AI That Respects the Enterprise

Agents shouldn’t be free-form chat macros; they should be stateful workers operating on events and brand-compliance policies:

- Task graph: ingest brief → generate variants → apply crop/brand policy → validate PDP rules → compliance check → tag → notify approvers → publish → record lineage.

- Scoped authority: agents can transform drafts, but require a human checkpoint to publish net-new brand assets.

- Safe by default: when a brand-guideline check fails, route to a queue with context, not a mystery error.
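A minimal in-memory sketch of such a worker follows, combining the idempotent handling and dead-letter queue mentioned earlier with the scoped-authority rule. The `Event` and `Worker` classes, the retry count, and the return labels are hypothetical; a production version would sit on a real message broker and persist its state.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    event_id: str     # unique per event, used for de-duplication
    kind: str         # e.g. "new_sku", "campaign_brief", "price_change"
    payload: dict


@dataclass
class Worker:
    max_attempts: int = 3
    processed: set = field(default_factory=set)       # IDs already handled
    review_queue: list = field(default_factory=list)  # drafts awaiting a human
    dead_letters: list = field(default_factory=list)  # events that kept failing

    def handle(self, event: Event, task: Callable[[dict], str]) -> str:
        # Idempotent: a replayed event is a no-op.
        if event.event_id in self.processed:
            return "skipped"
        last_error = ""
        for _ in range(self.max_attempts):
            try:
                draft = task(event.payload)
            except Exception as exc:   # sketch only: a real handler would be narrower
                last_error = str(exc)
                continue
            self.processed.add(event.event_id)
            # Scoped authority: the agent produces a draft, a human publishes.
            self.review_queue.append((event.event_id, draft))
            return "queued_for_review"
        # Retries exhausted: park the event with context instead of dropping it.
        self.dead_letters.append((event, last_error))
        return "dead_lettered"


def failing_task(payload: dict) -> str:
    raise RuntimeError("model timeout")


worker = Worker()
evt = Event("evt-1", "new_sku", {"sku": "SKU-001"})
print(worker.handle(evt, lambda p: f"draft for {p['sku']}"))  # queued_for_review
print(worker.handle(evt, lambda p: f"draft for {p['sku']}"))  # skipped
print(worker.handle(Event("evt-2", "new_sku", {}), failing_task))  # dead_lettered
```

The dead-letter entry keeps both the event and the last error, which is the "queue with context" the list above asks for.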

Deloitte notes growing interest in agentic AI, but warns there’s no silver bullet; the rails still matter.

Change Path: From Pilot to Production - Without Boiling the Ocean

- Pick Two High-Volume Marketing Asset Workflows

- Example: PDP image edits and campaign banner generation. Choose flows with clear format contracts and measurable SLAs.

- Shadow Mode (2–4 Weeks)

- Run the platform in parallel. Emit the existing formats so downstream teams aren’t forced to change tools. Compare outcomes on quality, latency, and exception rate.

- Gate with Policy + SLA

- Turn on policy-as-code for brand compliance. Instrument P95, exception types, and rework %. Publish dashboards to stakeholders.

- Tighten, Then Expand

- Stabilize before you spread. Cut overlapping AI tools, prove the flow end-to-end with an overarching AI brand compliance tool, then broaden to adjacent tasks - keeping output-format continuity for seamless handoffs.

- Institutionalize Brand Compliance

- Treat brand rules as code with versioning and review. Create a small “brand platform” squad accountable for entering and maintaining the brand rules and format contracts.

BCG’s data says value pools concentrate on core functions; start there and make the wins boringly repeatable.

Tame the Wild Horse

GenAI isn’t failing you; immature brand guardrails are. Adoption is high, but enterprise-level value follows discipline: stable asset identity and lineage, data truth at the edge, policy-as-code for brand compliance, event-driven orchestration, in-tool UX, output-format continuity, and measured SLAs. Build the brand-compliance surface, and the rest of the story - speed, scale, savings - writes itself.